12+ Reasons to Donate to ClusterFree

Why cluster headache mitigation should become your #1 effective giving priority this season: impactful, novel, very alive, and with plausibly fast results!

By Andrés Gómez Emilsson, ClusterFree Co-Founder & Member of Advisory Board

TL;DR: To motivate action and feel genuine internal alignment around a decision, sometimes we need to see it from many different angles. Even when a single reason should be enough, we need to motivate our entire internal coalition of subagents! Hence, all of these reasons to support ClusterFree in its mission:

Summary of the 12+ Reasons to Support This Cause

- Watch real people rapidly improve – Video testimonials of torture stopping in minutes

- Logarithmic scale of impact – Helping someone with this condition is potentially one of the highest-leverage gifts anyone can give to another person’s life

- Insurance against illegible suffering – Building a world that takes invisible pain seriously, including your own, should you ever face it (fingers crossed you never do!)

- Proof-of-concept for valence-first cost-effectiveness – This illustrates the corner cases where QALYs/DALYs fail catastrophically

- Intellectual coalition – Scott Alexander, Peter Singer, Anders Sandberg, Robin Carhart-Harris, etc. have seen the evidence and are convinced this is real

- Schelling point for suffering reduction – Network effects for future high-impact work; attracting genuine talent to deep suffering reduction is a value proposition in its own right

- It’s a strike against medical paternalism – When it comes to extreme suffering, informed consent to known therapies, even those not yet officially approved, should always be an option on the table

- Actually tractable – Success looks like a 3-5 year timeline with a clear theory of change

- Speed cashes out in suffering prevented – ~70,000 people are in extreme agony right now, so every day of delay matters greatly

- Works as an accelerant for an existing movement – Adding coordination to grassroots momentum that’s already underway (giving the psychedelic renaissance wings!)

- Psychospiritual merit (if you believe in “karma”) – Buddhist texts specifically highlight headache relief, with “immeasurable merit” in store for you and your loved ones if you help with clean intentions

- Bodhisattva vision – Practice looking into darkness without flinching

- Bonus – I’ll stop talking about cluster headaches in Qualia Computing! Fund it so I can get back to core QRI research

Introduction: Why Multiple Reasons Actually Matter

In principle, deciding where to donate should be straightforward: calculate expected value, fund the highest-impact opportunity, done. In practice, we’re coalitions of subagents with different reward architectures, time horizons, epistemics, and thresholds for action.

At a neurobiological level, motivation doesn’t work the way we pretend. It’s not about “willpower” or “being convinced by good arguments.” Different brain regions make “bids” to the basal ganglia, using dopamine as the currency. Whichever region makes the highest bid gets to determine the next action. Scott Alexander explains this in Toward A Bayesian Theory Of Willpower (2021). Within this framework, what we call “motivation” is just whichever subsystem’s bid is currently winning. Whether or not the details are right, I think this matches how I see people behave.

If you want to trigger high-effort action, giving just one reason may not be enough. That only raises one bid. Layer multiple kinds of reasons (emotional, moral, social, self-interest, narrative, identity-based), and you multiply the bidders in your internal parliament. Scott uses stimulants as an example: they “increase dopamine in the frontal cortex… This makes… conscious processes telling you to (e.g.) do your homework… artificially… more convincing… so you do your homework.”

Look, I’m being straightforwardly manipulative here. Giving you twelve reasons instead of one is designed to activate more of your subagents. But it’s prosocially manipulative – to help you integrate a truth you might already intellectually accept but haven’t acted upon yet. The bullet point approach can be misused when it obfuscates (think laundry list of complaints when there’s really just one big issue), so let me be meta-transparent: I genuinely believe ClusterFree is extremely high-impact, and I’m deliberately structuring this to get past your action threshold. If any one or even several of these reasons feel less convincing to you, ignore them. The robust core case stands on its own.

There’s also the threshold problem. In Guyenet On Motivation (2018), Scott discusses how higher dopamine makes the brain more likely to initiate any behavior. When dopamine is low, even strong reasons may not overcome inertia. Increased dopamine “makes the basal ganglia more sensitive to incoming bids, lowering the threshold for activating movements.” Sometimes what’s needed isn’t better arguments but enough energetic activation to allow any reason at all to push action over the threshold. That’s why you should read this while ~~high on LSD and/or Adderall~~ fully rested and energized.

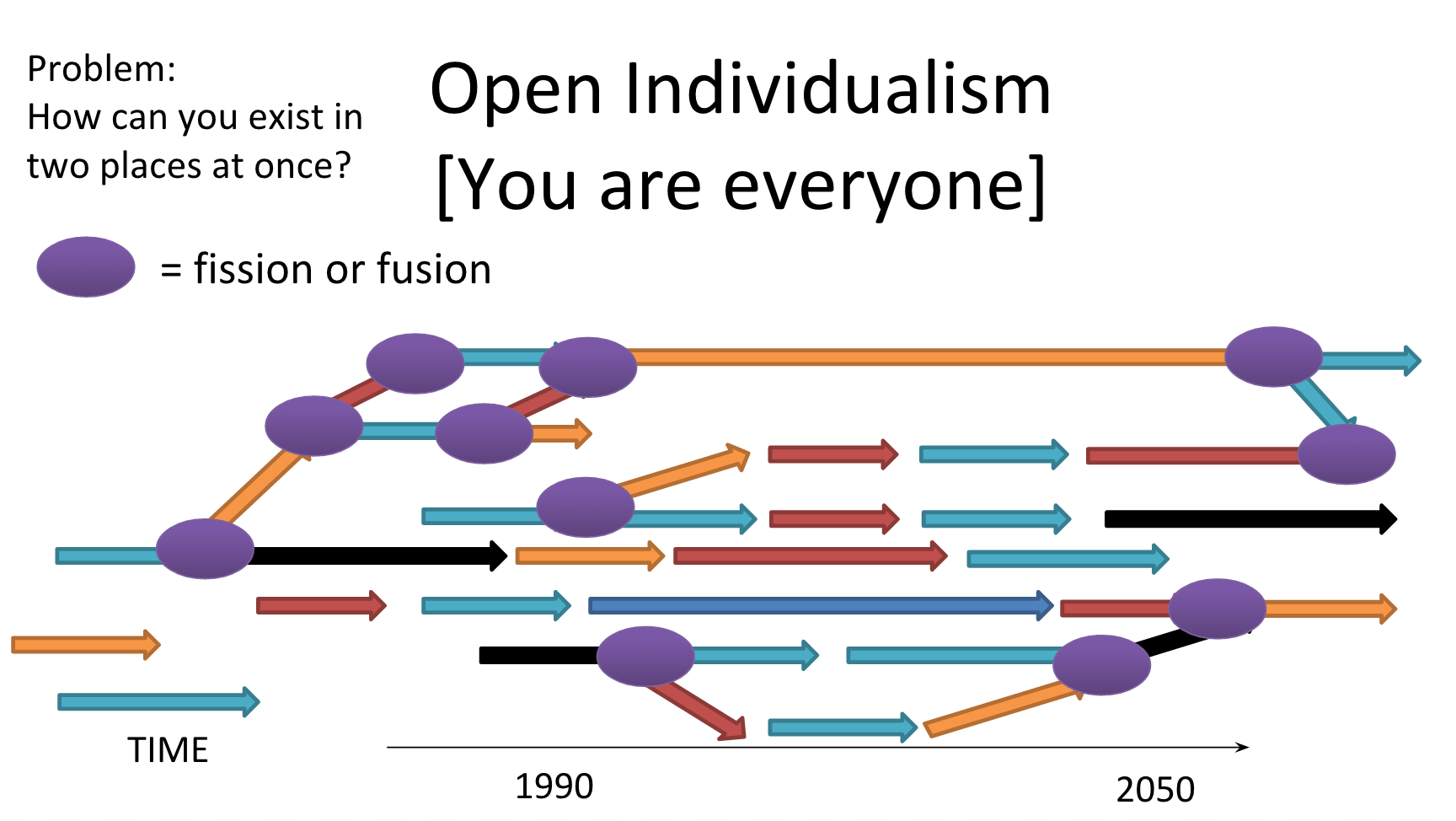

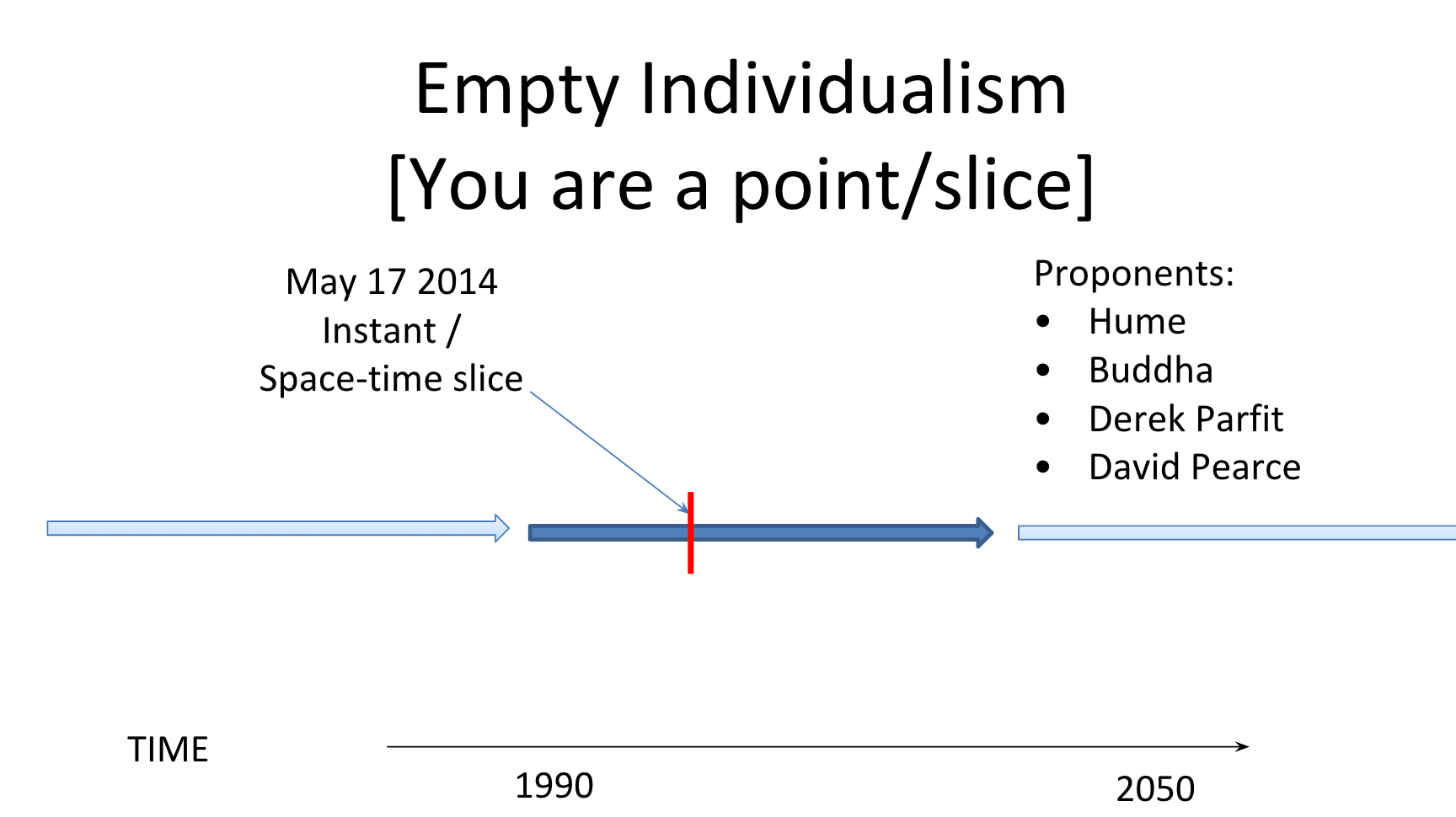

Naturally, this connects to annealing. At QRI, we think of belief updating as requiring an energetic process. It’s not enough to know something matters; you need metabolic resources to actually integrate that knowledge and reconfigure your behavior accordingly. The REBUS (RElaxed Beliefs Under pSychedelics) framework applies here: people intellectually understand that cluster headaches are astronomically bad, that preventing them is extraordinarily high-leverage, and that this is one of the most intense forms of suffering we can and should urgently address. Yet this knowledge may remain compartmentalized and inert, unable to meaningfully shape action, much like other “ongoing moral catastrophes” for which future generations may judge our society.

What breaks through? Multiple simultaneous channels of evidence that together cross energy thresholds. Emotional resonance. Social proof. Narrative coherence. Personal connection. These aren’t redundant: they combine into a gestalt that supplies the energetic budget needed for actual system-wide updating.

So here are the twelve reasons to support ClusterFree. Not because you need all twelve to “get it” intellectually, but because different reasons will activate different coalitions in your brain.

And if you’re not in a position to donate but still want to help, please keep reading: you’ll find many high-impact ways to contribute at the end!

1. You Can Actually See People Rapidly Improving

Most charity is abstract. You send money into a statistical void and trust the meta-analyses.

With ClusterFree, you can watch video testimonials of actual people describing how psilocybin or DMT stopped “the worst pain imaginable” in minutes. The person who was screaming, punching walls, and contemplating suicide is suddenly calm, coherent, and alive again.

Watching someone’s face change like that hits you differently than reading a cost-effectiveness analysis. Your brain gets direct evidence of the state change. You see the suffering stop.

And strategically, patient testimonials are how this actually works. Raw video testimonials of “this stopped my torture” create demand that no institutional gatekeeping can fully suppress. People are already using this in advocacy. We’re just collecting the stories systematically and making them impossible to ignore. One major medical center sees enough of these, runs a supervised protocol, publishes clean results, and every other institution’s liability calculation flips.

2. On the Logarithmic Scale of Helping Another Human, This Is Unfathomably High

Preventing cluster headaches for life is plausibly one of the single largest “good deeds” a human can do for another human being. Yes, this is grandiose. But if something big IS true and you know it, pretending it’s not to avoid looking grandiose is fake humility that damages the cause.

Cluster headaches are called “suicide headaches” because the pain is so extreme that people actively contemplate ending their lives during attacks. Patients report “drilling through my eye socket,” “being stabbed in the brain,” “pain so bad I can’t think, can’t speak, can’t do anything but scream.”

Here’s a rough intuitive sketch of what the logarithmic scale of helping another person might look like (this isn’t rigorous math, just an illustration that is likely directionally right[1]):

- 10^0: holding a door open

- 10^1: gifting a pen

- 10^2: introducing them to someone useful

- 10^3: helping them move house

- 10^4: catching a major work or family mistake before it ruins their week

- 10^5: teaching them a compounding skill (meditation, programming, emotional regulation)

- 10^6: funding their higher education, changing their entire socioeconomic trajectory

- 10^7: helping them escape a pathological family system

- 10^8: preventing them from falling into a cult, deep addiction, or abusive relationship

- 10^9: curing a chronic condition like treatment-resistant generalized anxiety disorder (GAD)

- 10^10: saving their life while preserving psychological integrity

- 10^11: giving them a permanent upward shift in baseline wellbeing and quality of consciousness, such as advanced contemplative practice can do over the course of decades

- 10^12: preventing cluster headaches for life

Why 10^12? A single cluster headache attack is plausibly in the 10^9 to 10^11 range of negative valence – orders of magnitude worse than migraine, worse than childbirth, worse than even torture. A typical patient experiences thousands of these across their lifetime. The multiplication is straightforward.

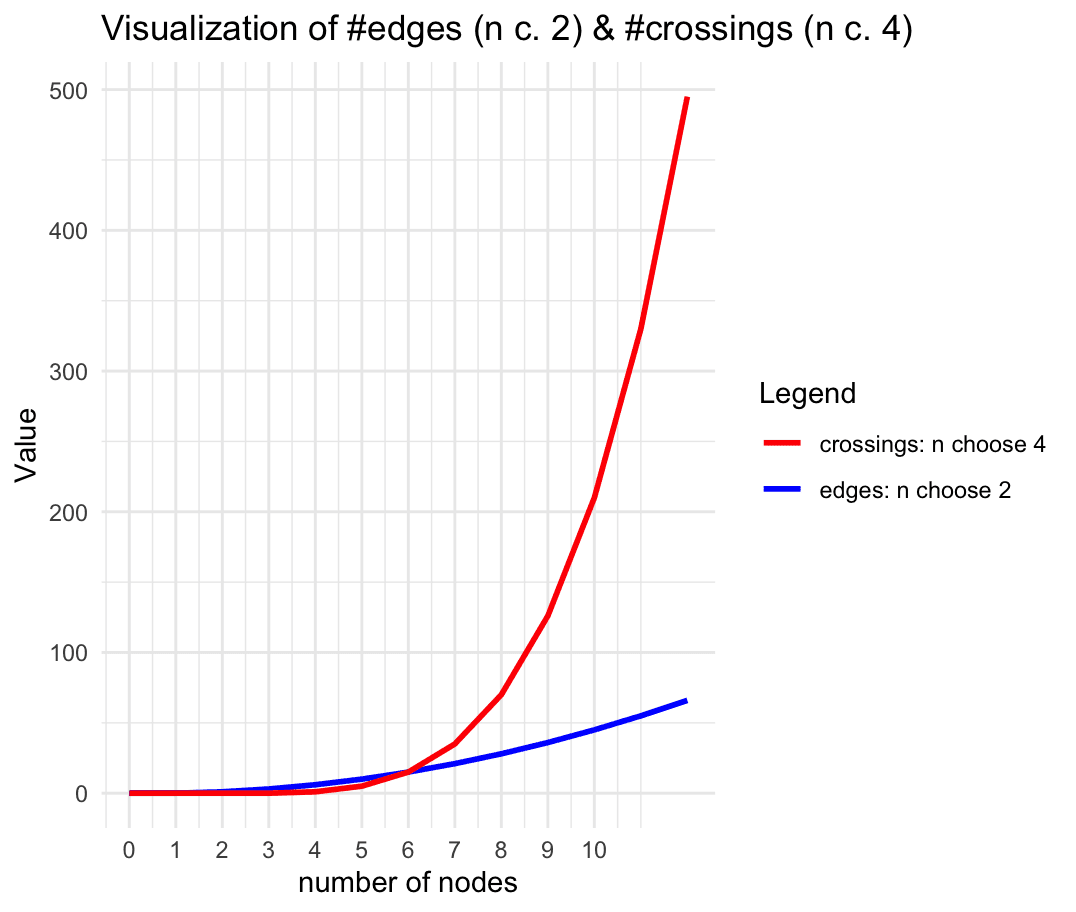

We’ve done empirical work quantifying cluster headache intensity using patient self-reports, cross-condition comparisons, suicide attempt rates, and other methods. Full details are in our EA Forum posts (Quantifying the Global Burden of Extreme Pain from Cluster Headaches, Logarithmic Scales of Pleasure and Pain) and our Nature: HSSC paper.

The theory of change for the open letters on ClusterFree is straightforward:

- Patient testimonials – Raw evidence that DMT/psilocybin (even at subhallucinogenic doses) works for a large fraction of sufferers, spreading organically through desperate communities. This is already happening underground.

- Reputation-amplified legitimization – Get enough credible voices (clinicians, researchers, policy experts) publicly acknowledging both the crisis and the evidence. We already have 800+ signatures, many from extremely prestigious people. This shifts what’s discussable. Journalists cover it differently. Clinicians stop whispering for fear of judgment and start preparing, even if quietly at first (I’m already seeing signs of this in some groups).

- Clinical cascade – One major medical center runs a supervised protocol, publishes clean results, and every other institution’s liability math inverts. You don’t need consensus. You need one proof point, and the dominoes fall.

3. It’s Insurance Against Your Own Extreme Suffering Being Dismissed

Cluster headaches are invisible. No blood, no broken bones, nothing on medical imaging. Just someone screaming, rocking, and punching walls while doctors tell them to “try reducing stress”, ask “have you considered yoga?”, or suggest “maybe try an ibuprofen?”

This is what illegible suffering looks like. People don’t believe you. Institutions can’t help you. You’re trapped in a cage of agony that no one else can see.

Supporting work on illegible suffering means supporting the principle that intense subjective experience matters even when it can’t be measured easily. By supporting ClusterFree, you’re building a world where, if you ever wind up in incomprehensible pain (chronic illness, treatment-resistant conditions, novel syndromes medicine doesn’t understand yet, a hard-to-communicate and hard-to-alleviate pocket of deep biopsychosocial suffering), people will actually take it seriously. In a world where “I am in agony, and this helps” is treated as highly important data, existence is safer and more dignified.

Medical, institutional, and social gatekeeping kills people. It traps them in years of unnecessary suffering because the safe and affordable tools that work aren’t “approved” yet. By supporting patient-driven, evidence-based access to what actually helps, you’re contributing to practical moral betterment and making the world safer for everyone who might need it. Including you.

4. It’s a Proof-of-Concept for Valence-First Cost-Effectiveness

Most effective altruism analyses use QALYs (Quality-Adjusted Life Years) or DALYs (Disability-Adjusted Life Years) to evaluate interventions. These metrics have a major limitation: they systematically underweight extreme suffering. A QALY-based analysis of cluster headaches captures some utility loss but misses orders of magnitude of suffering because attacks are brief and non-lethal – even though they’re torture-level and recurring. The frequency distribution is also extremely skewed (some sufferers have 10+ attacks daily), which standard health economics frameworks struggle to properly account for.

ClusterFree evaluates interventions based on how bad things actually feel and how prevalent they actually are, not through the lens of reduced life expectancy or economic burden. The guiding question is: “How much suffering are we preventing, measured by its actual intensity?”

We’ve quantified cluster headache intensity and prevalence using patient self-reports, cross-condition comparisons, suicide attempt rates, and other complementary empirical methods. The result is clear: cluster headaches score astronomically high. This is why preventing them matters so much more than conventional metrics would suggest.

If you want a future where we optimize for the real reduction of suffering instead of metrics that structurally and systematically ignore its most intense forms, ClusterFree is the seed. We’re showing how you can make rigorous, evidence-based decisions by taking the actual experience seriously. This serves as a template for charity evaluation and ethical triage (not necessarily to replace current Effective Altruism methods, but to add a _critical_ missing evaluation angle to the ensemble model for how to help most effectively).

5. You’ll Be in the Company of Intellectual Giants

Scott Alexander supports this. Anders Sandberg supports this. Peter Singer supports this. These are thought leaders with decades of track records in rigorous, scout-mindset thinking about doing good. They don’t endorse lightly. They’ve looked at the testimonials, the statistics and trends, the theory of change, and said: this is real.

If you trust their epistemics even a little, their endorsement is strong Bayesian evidence. These aren’t people chasing trends or optimizing for social approval.

And beyond the rationalist/EA sphere? Robin Carhart-Harris supports this – one of the leading psychedelic neuroscientists in the world. Shamil Chandaria supports this – doing serious work on meditation, predictive processing, and contemplative neuroscience. Christopher H. Gottschalk supports this – a neurologist who actually treats cluster headache patients and knows firsthand how devastating they are.

EA thinkers, psychedelic researchers, clinical neurologists, contemplative scientists – they’re all saying the same thing. That doesn’t happen often.

You get to join this coalition early. While it’s still underrecognized. While it requires actually engaging with the arguments instead of following the consensus. While supporting it means skin in the game.

Supporting ClusterFree now signals good taste (you can spot high-impact opportunities before they’re obvious), high reasoning capacity (you can evaluate complex arguments across disciplines), genuine compassion (you care about actual suffering, not just legible causes), and epistemic independence (you can disagree with the consensus when the evidence demands it).

When this becomes mainstream (and it will), you were there first.

6. It’s Creating a Schelling Point for Serious Suffering-Reduction Work

ClusterFree is reducing the coordination costs and bringing together people who can spot neglected pools of immense value early on.

Researchers who care about phenomenological intensity. Clinicians frustrated with institutional gatekeeping who want evidence-based psychedelic medicine. Policymakers who understand regulatory strategy. Patients with direct experience who want to help others. All working on the same thing with a clear theory of change.

Many causes tend to be either too vague (“reduce suffering”) or too narrow (“fund this one study”). ClusterFree hits the sweet spot – it is specific enough to be actionable, broad enough to matter at scale, and legible enough to attract serious supporters.

The network effects compound. When the next high-leverage suffering reduction project comes along, there’s already a group of competent people who know how to execute. The people showing up now will co-build what comes next. Rather than funding one project, you’re seeding a network that keeps generating high-impact work.

7. It’s a Strike Against Paternalistic Control Over Suffering Relief

Right now, people with cluster headaches are told they cannot officially access psilocybin or DMT – the interventions that consistently, rapidly, and reliably work for a large fraction of sufferers – because the institutions have decided they’re not allowed to make that informed choice. Even when they’re screaming in agony. Even when they’re suicidal. Even when nothing else helps.

Medical paternalism is at its most cruel when patients hear: “We know you’re suffering, but you can’t have the effective, affordable, and safe-to-manage thing that stops your agony, because we haven’t finished the proper studies yet, and/or because of the system’s inertia.” Never mind that converging evidence shows it works. Never mind that patients are already using it skilfully and reporting dramatic relief. Never mind that the risk profile is more than worth it given the suffering prevented.

ClusterFree, with your support, is building the legal, scientific, and social infrastructure to challenge that amoral status quo. We pave the way for informed consent, supervised access, and letting people make rational decisions about their own unbearable pain.

If you value bodily autonomy, participatory medicine, and the right to pursue relief from extreme suffering, this is the fight. And multiple predictors of success suggest it’s winnable.

8. This Is Actually Tractable

Most extreme suffering feels impossibly hard to address. Oftentimes, contemplating extreme suffering causes a sense of helplessness. It’s too big, too entrenched, and too complex. You can care deeply and still feel like there is nothing you can meaningfully do about it.

Cluster headaches are different. We have video testimonials. We have 800+ signatures from people with institutional power. We have a clear mechanism – psilocybin/DMT abort attacks rapidly and safely. We have willing clinicians ready to run supervised protocols. We have patient demand already creating the underground adoption.

The main barrier is coordination and legitimacy-building. That’s where ClusterFree steps in: we close the gap between common knowledge and the rollout of systemic solutions.

And we’re going beyond mere advocacy. Bob Wold of ClusterBusters calls DMT a “breakthrough therapy” for its near-instant pain relief; we’re working to understand why it works, so we can identify the best next steps. Our research includes exploring legal, non-hallucinogenic (or only mildly hallucinogenic) alternatives like 5-MeO-DALT, which one patient discovered in Shulgin’s TiHKAL and used to successfully treat 46 cluster headache patients. Understanding the mechanisms, testing new approaches, and developing targeted therapies based on them should translate into greater accessibility and effectiveness.

We (admittedly optimistically) believe this is doable within 3 to 5 years of focused and effective execution: build the coalition, get one major medical center to publish clean results, and watch the common knowledge cascade. Meanwhile, we’re already developing better treatments aimed at maximally broad legal adoption.

Most things that matter this much take decades… or never even happen. This one is actually within reach.

9. Every Month of Delay Means Unnecessary Pits of Suffering

Right now, while you’re reading this, ~70,000 people are experiencing a cluster headache attack. More will start in the next few minutes. And more after that, like a global wave of agonizing pain.

Roughly 3 million people worldwide have cluster headaches in any given year. Many experience attacks daily or multiple times per week during the cluster periods. We estimate that globally, cluster headache patients spend approximately 70,670 person-years per year in pain, with about 8,570 person-years (about 3.1 million person-days) spent at extreme pain levels (≥9/10).
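The figures above are linked by simple unit conversions. Because 70,670 person-years of pain accrue over one calendar year, on average roughly 70,670 people are in pain at any given instant, which is consistent with the ~70,000 figure; likewise, 8,570 person-years is about 3.1 million person-days. Here is a minimal Python sketch of those conversions, assuming a 365.25-day year; the function names are illustrative only.

```python
# Minimal sketch: unit conversions behind the global-burden figures quoted above.
# Assumption: a 365.25-day average year. Function names are hypothetical, for illustration.

DAYS_PER_YEAR = 365.25


def average_concurrent(person_years: float, window_years: float = 1.0) -> float:
    """Average number of people in pain at any instant, given the total
    person-years of pain accrued over a window of `window_years`."""
    return person_years / window_years


def person_days(person_years: float) -> float:
    """Convert person-years into person-days."""
    return person_years * DAYS_PER_YEAR


in_pain = 70_670   # person-years spent in pain per year (figure from the text)
extreme = 8_570    # person-years per year spent at >= 9/10 pain (figure from the text)

print(f"People in pain at any moment: ~{average_concurrent(in_pain):,.0f}")
print(f"Extreme-pain person-days per year: ~{person_days(extreme) / 1e6:.1f} million")
print(f"Share of pain time at >= 9/10: {extreme / in_pain:.0%}")
```

The key step is that person-years accrued per year has the dimension of "people": dividing total time in pain by the length of the window gives the average head-count in pain at any moment.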

The math is brutal: with every month of delay, patients undergo millions of preventable torture-level attacks. While other cause areas may pose a genuine dilemma between donating now and donating later, the case for ClusterFree is urgently clear: donate now, and we will do our best to bring unimaginable counterfactual relief to millions in 2026–2027.

Our model is designed for speed – we are not waiting for perfect RCTs, commercial products, or stable institutional consensus. We are building the strategic legitimacy cascade that lets institutions act on what we already know.

The suffering is happening right now. The effective solution exists right now. We know how to connect the dots, and the only question is how fast we can do so.

10. ClusterFree Is Accelerating an Already Developing Movement

ClusterBusters has been doing heroic work for years, building community, sharing information, and giving people hope. The psychedelic renaissance has been shifting cultural and scientific attitudes. Various researchers and advocates have been pushing this forward through different channels.

ClusterFree adds a specific piece: demonstrating that this is a winnable fight right now.

We bring:

- An explicit theory of change (testimonials lead to reputation-amplified legitimization, which leads to clinical cascade);

- 800+ signatures from outstanding individuals, many with institutional power and cultural influence;

- A straightforward narrative: “this is effective, safe, and urgent, and we can scale this legally” – and we’re not afraid to highlight DMT as especially promising (due to its extremely fast pain relief when “vaped” at the onset of an attack);

- Coordination infrastructure that connects patients, clinicians, researchers, and funders around a shared goal; and

- A global but local-context-sensitive approach in both coverage and mindset: while ClusterBusters focuses on the U.S. and UK, we’re building parallel advocacy tracks across multiple jurisdictions (Canada, Europe, Latin America, etc.) to build the missing capacity.

This strategy acts synergistically with other approaches, de-risking them rather than obstructing them. When a major medical center decides to run a supervised protocol, they will do it in an environment where 800+ credible voices (as of December 13th 2025) have already confirmed that this is real, this matters, and the research must take place as soon as possible.

Our strategy is being developed and executed by uniquely talented individuals with a strong track record. Alfredo Parra leads the organization: he is exceptional at navigating the interface between institutions, has 7+ years of nonprofit management experience, and has proven to be extremely conscientious and high-integrity (don’t take my word for it – look at all the work). The team and the community that seeded it bring together people who simultaneously understand the importance of suffering reduction, psychedelic phenomenology, regulatory strategy, and movement building. They care about the deep structure of consciousness and aren’t swayed by common narratives. This is a rare comparative advantage and, in our view, makes them an excellent fit to push this cause forward.

The fruitful work has been happening already. Where we step in is providing leverage at a specific bottleneck: making the path to legitimacy visible and coordinated.

11. If You Take “Karma” Seriously, Look at What the Texts Say About Headache Relief

In the Bodhicaryāvatāra, Śāntideva teaches that “immeasurable merit” arises even from the simple thought: “Let me dispel the headaches of beings.” The tradition treats this literally. Not metaphorically. Relieving sharp, overwhelming pain generates outsized karmic effects because it interrupts some of the most intense forms of duḥkha in the human realm.

Why headaches specifically? Because they were considered the archetype of piercing, mind-breaking pain in the classical world. Cluster headaches exceed even that ancient benchmark. They represent some of the most unbearable moments a human mind can experience.

The karmic logic is clear: if intention aligned with the relief of severe suffering produces merit that scales with the intensity of the duḥkha relieved, then work that prevents torture-level pain for thousands of people is not ordinary charity but a high-density, boutique, ultra-rare karmic investment.

For practitioners of the Bodhisattva path, karma constitutes a feedback loop shaping future clarity, opportunity, and awakening. Helping beings escape states of extreme pain is singled out across the Mahāyāna as one of the fastest ways to accumulate merit and purify obscurations.

If even contemplating the wish to relieve a single headache creates immeasurable merit, then actively supporting work that may end this class of suffering at scale plants karmic seeds that ripple across lifetimes.

Even if you hold a weak, naturalized version of karma (something like “intentions to help tend to produce good outcomes proportional to the good intended”), the efficiency here is absurdly high. Instead of helping someone have a slightly better day, you’re preventing thousands of hours of above-torture-level pain per person.

And what if you don’t believe in karma at all? The consequentialist case is still clear. You’re preventing, say, ~10^12 units of negative valence per person.

12. You Get the Bodhisattva-Tier Vision

Most people, when they look into the true darkness of suffering (the worst pain imaginable, sustained for hours, recurring for decades), recoil. They look away. They rationalize (“someone else will handle it”), they cope (“well, suffering is just part of life”), and they freeze (“I can’t do anything about this anyway”).

Such reactions are understandable given the limits of our agency and the scope of the challenge. Luckily, there’s another response possible and available today:

You see it, and you roll up your sleeves. Where others flinch or cope, you take intentional action.

That capacity to clearly perceive the worst of what’s real and respond with competence, care, direction, and focus – rather than despair, avoidance, denial, or freezing – is a rare gem. It separates people who talk about compassion from people who enact it. The “Bodhisattva move” is: “I see the suffering. I will not turn away. I will do what needs to be done.”

Supporting ClusterFree strengthens that moral muscle. It’s a practice for the kind of person you may want to be: someone who can look into the darkest abyss and respond with pragmatism, not platitudes.

And a bonus reason for Qualia Computing readers…

So I Can Stop Talking About Cluster Headaches in Qualia Computing

Look, I care very deeply about this work, which is exactly why ClusterFree needs to claim its own space. QRI has a complementary mission to fulfill – studying and utilizing coupling kernels, topological approaches to the boundary problem, neural annealing frameworks, and the deep structure of valence.

The more ClusterFree is funded and self-sufficient, the more I can get back to the core theoretical work for which I’m best suited. Which, by the way, is exactly how we identify the next high-leverage suffering reduction opportunities!

If you want me to shut up about cluster headaches and get back to talking for hours about beam-splitter holography and DMT phenomenology, the fastest way to make that happen is to generously fund ClusterFree.

You’re welcome.

What We’re Specifically Asking For

ClusterFree is currently a two-person operation: Alfredo leading the day-to-day execution (coalition building, clinical coordination, policy navigation, the 800+ signature campaign), and me providing strategic direction, research frameworks, writeups like this one, and QRI infrastructure. The initial donations will let us hire additional top talent to manage critical workstreams, so that we can:

- Pursue parallel regulatory tracks in different jurisdictions;

- Optimize our media presence by talking to journalists, podcasters, and medical journals;

- Build global partnerships with patient organizations, headache centers, psychedelic advocacy groups, and retreat centers that treat this and related conditions;

- Coordinate with medical centers willing to run supervised trials;

- Create high-quality topical resources for patients in multiple languages, which are scarce and difficult to find; and

- Pursue other high-impact value streams we’re ready to launch with additional capacity.

If significant funding is obtained, it will allow us to personally visit retreat centers and bring people with cluster headaches to suitable settings where they can experiment with these therapies, and where we can study them using QRI’s approaches to systematic phenomenology mapping, including EEG and biorhythm monitoring. This might turn out to be really important, possibly allowing us to determine which aspect of psilocybin/DMT relieves the pain. Our working assumption, based on many interviews with sufferers, is that DMT’s “body vibration” effect is key to its pain relief; if true, this is something we could significantly optimize by developing more targeted therapies.

While our network of volunteers is growing (see Slack below), having dedicated paid staff accelerates our efforts dramatically. The faster we move, the louder we say “no” to overlooked suffering.

Can’t Donate But Want to Help?

There are many high-impact ways to contribute beyond financial support:

- Sign the open letter – Adding your name increases our legitimacy and helps shift the Overton window.

- Share patient testimonials – If you have cluster headaches and have used psychedelics, your story can help build the evidence base. We believe video testimonials from sufferers are especially powerful. Recordings that capture the very moment psilocybin/DMT relieves the suffering, in real time, might have the most emotional resonance of all.

- Join our Slack – We list simple but high-impact volunteer tasks (translations, social media, research assistance, essay feedback, etc.).

- Connect us with key people – Do you know journalists, podcasters, clinicians, policy makers, or potential donors? Introductions are greatly appreciated!

- Spread the word – Share this essay, talk about cluster headaches in the right tone, and become the relieving change you want to see and experience in the world.

Conclusion

With all these reasons in mind, ClusterFree satisfies the utilitarian, the virtue ethicist, the long-term strategist, the person who wants meaning, the person who values courage, the person who wants to accumulate spiritual merit, the person who wants to see these therapies reach FDA approval, the person who just wants to see real humans stop screaming in pain, and the one who embodies all these motivations simultaneously.

Donate to QRI (the incubator organization that made this possible and that carries out further aligned efforts)

Our internal coalitions can agree that this matters, and we can actually do it. Thank you.

Acknowledgments: Many thanks to Marcin Kowrygo for his generous edits of the draft. Thanks to Chris Percy, Roberto Goizueta, Hunter Meyer, and, of course, Alfredo Parra for relevant discussions and suggestions for this write-up. Huge thanks to the Clusterbusters team for their incredible and ethically urgent work (and for their generosity with their time in helping people in need, as well as for agreeing to be interviewed on short notice at Psychedelic Science 2025). Thanks to Jonathan Leighton (OPIS) for inspiration, aligned work, and fighting the good fight! Thanks to Jessica Khurana (and her team) for founding Eleusina Retreat – the world’s only retreat center focused on using psychedelics, legally, for treating extreme pain conditions. Thanks to Maggie Wassinge for her copious emotional support, love, and motivation to keep doing the real work, even when it feels hopeless at times (seriously, THANK YOU). And to the spirit of Anders Amelin (RIP), who is always with us, encouraging and motivating, giving us strength and intelligence. May he rest in peace, knowing we’re pursuing our ambitious suffering-reducing goals <3 And thanks to the entire QRI team, as well as the broader qualia community, for creating a container where these ideas can be freely explored with curiosity and without stigma. And finally, thanks to all of the donors of QRI and ClusterFree: we will do what we can to make you proud of supporting us. Metta!

[1] On the 10^12 estimate: This is admittedly a back-of-the-envelope calculation, but here’s the reasoning. A cluster headache patient might experience anywhere from 3,000 attacks (a conservative figure, with successful treatment) to 30,000+ attacks (severe chronic cases) over their lifetime. Using the conservative estimate of 3,000 attacks averaging ~60 minutes (3,600 seconds) each gives us ~10^7 seconds of extreme pain. Now for the intensity ladder, using a pinprick as 1 unit of discomfort. Holding a door open might prevent ~0.1 units. Kidney stones, already rated 10/10 on standard pain scales, are plausibly ~1,000× more intense than a pinprick (10^3). Each second of cluster headache pain appears to be ~10× worse than kidney stones (10^4 relative to our baseline). Multiplying by 10^7 seconds gives us 10^11 from hedonic intensity alone. On top of that, cluster headaches impose a constant inter-ictal burden (the suffering between attacks), including PTSD, anticipatory anxiety, and a profound sense of doom (see interview with Clusterbusters founders at 53:10-53:40). This could add a 2-5× multiplier, bringing us to ~10^12. For severe cases with 10× more attacks, the calculation easily reaches 10^13 or higher. The true value likely ranges between 10^7 (very mild cases with effective treatment) and 10^16 (severe chronic cases, accounting for peak intensities and suffering between attacks). Even at the conservative end, preventing cluster headaches for life remains one of the highest-impact interventions available to individuals. Similar back-of-the-envelope calculations can be done to put each step on the “logarithmic scale of help you can provide to someone” in perspective.
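For readers who want to check the multiplication, here is a minimal Python sketch that simply re-multiplies the footnote's own rough inputs: 3,000 or 30,000 attacks, ~3,600 seconds per attack, an intensity of 10^4 pinprick units per second, and a 1-5× inter-ictal multiplier. The helper name `lifetime_burden` is hypothetical, and the output is only as good as those order-of-magnitude guesses.

```python
import math

# Rough inputs taken from the footnote; a pinprick is defined as 1 unit per second.
SECONDS_PER_ATTACK = 3_600             # ~60-minute average attack
PINPRICK = 1.0                         # baseline intensity
KIDNEY_STONE = 1e3 * PINPRICK          # ~1,000x a pinprick
CLUSTER_HEADACHE = 10 * KIDNEY_STONE   # ~10x a kidney stone, i.e. 10^4


def lifetime_burden(attacks: int, interictal_multiplier: float) -> float:
    """Hypothetical helper: total pain units over a lifetime of attacks."""
    seconds_in_pain = attacks * SECONDS_PER_ATTACK
    return seconds_in_pain * CLUSTER_HEADACHE * interictal_multiplier


# Print the order of magnitude (log10) for each scenario and multiplier.
for label, attacks in [("conservative", 3_000), ("severe chronic", 30_000)]:
    for mult in (1, 2, 5):
        burden = lifetime_burden(attacks, mult)
        print(f"{label}, multiplier {mult}: ~10^{math.log10(burden):.1f}")
```

With these inputs, the conservative case lands between about 10^11.0 and 10^11.7, and the severe case between about 10^12.0 and 10^12.7, which round to the footnote's ~10^12 and ~10^13 at the nearest order of magnitude.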

Scott Alexander in “Links For December 2024” (Dec 24 2024):

13: Alfredo Parra of Qualia Research Institute on cluster headaches. Cluster headaches are plausibly the most painful medical condition. If you ask a cluster patient to rate their pain, they’ll almost always say 10/10. Does that mean the headaches are twice as painful as a 5/10 condition? There are some philosophical reasons to expect pain to be logarithmic, so plausibly cluster headaches could be orders of magnitude more painful than the average condition. Once you internalize that possibility, it throws a wrench into normal QALY ratings and suggests that, even though cluster headaches are pretty rare, they might cause a substantial portion of the global burden of disease (or even a substantial portion of the suffering in the world). Some psychedelics, especially psilocybin and DMT, seem to treat cluster headaches very effectively, so the more you believe this reanalysis, the more interested you should be in figuring out how to turn these into an accessible therapy (see clusterbusters for more information on this aspect).

And more recently in “Open Thread 409” (Nov 24 2025):

2: Qualia Research Institute announces their spinoff effort ClusterFree. Cluster headaches (aka “suicide headaches”) are probably the most painful medical condition known to science, which makes them a natural priority for some utilitarians. They seem to be extremely treatable by psychedelics like psilocybin and DMT (including sub-hallucinogenic doses), so ClusterFree is working on getting governments to research this further and maybe get these drugs into the medical pipeline (cf. ketamine for depression). There’s an open letter here, and you can contact them here. The information for patients is at the bottom of this page.

Peter Singer in his recent piece “The Best Treatment for the Most Painful Medical Condition Is Illegal” (Dec 11 2025):

A recent article in Nature: Humanities and Social Science Communications found the funding provided in the United Kingdom for research on cluster headaches to be “orders of magnitude” less than that provided for multiple sclerosis, a condition that affects a similar number of people. The authors conclude that, given that we regard the provision of anesthesia for surgery to be essential, we should also recognize relief for extreme pain as essential. Finding ways to do so should warrant the highest funding priority.

A new initiative called Clusterfree has launched global open letters calling on governments to provide legal access to psychedelics for people with cluster headache. I have signed, and I hope that you will, too.